Some time ago, the GitHub repo was set up to run tests on Travis CI and AppVeyor. At the same time, it was configured to send coverage reports to https://codecov.io.

A little bit of introduction

- coverage is a metric (sometimes not as precise as I'd wish) that shows how much of a piece of code gets exercised by tests

- web2py currently includes tests only for the gluon code, not for the gluon/contrib modules.

- we use Travis CI and AppVeyor to test every commit against Python 2.5, 2.6, 2.7 and PyPy

- web2py split pydal out into a separate repo, but the same considerations apply: its tests run against SQLite, MySQL, PostgreSQL, MongoDB and GAE on Travis CI, and against MSSQL on AppVeyor
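To make the metric concrete, here is a toy line-coverage tracer built on the standard sys.settrace hook: it records which lines of a function actually ran during one call. It is only a sketch of the idea. coverage.py uses its own, much faster tracer and also handles whole modules, branches and reporting. The names covered_lines and sign are made up for this example.

```python
import sys


def covered_lines(func, *args):
    """Call func(*args) and return the set of its lines that executed.

    Line numbers are relative to the 'def' line (0 = the def itself).
    """
    code = func.__code__
    hits = set()

    def tracer(frame, event, arg):
        # Only follow frames running func's own code; ignore everything else.
        if frame.f_code is not code:
            return None
        if event == "line":
            hits.add(frame.f_lineno - code.co_firstlineno)
        return tracer  # keep receiving 'line' events for this frame

    previous = sys.gettrace()
    sys.settrace(tracer)
    try:
        func(*args)
    finally:
        sys.settrace(previous)  # restore whatever tracer was active before
    return hits


def sign(x):
    if x >= 0:
        return "non-negative"
    return "negative"
```

Calling covered_lines(sign, 5) reports offsets 1 and 2 (the if and the first return) but not 3: a test suite that only ever passes positive numbers leaves the last return uncovered, just like the red lines in a coverage report.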

Here I'll explain how to replicate, with your own Python interpreter, what happens on Travis CI and on codecov.io.

Web2py features

web2py runs its tests with a simple ./web2py.py --run_system_tests command. A few of these tests exercise pydal, just to check that nothing breaks in the integration; the extensive pydal tests are run within the separate pydal project.

By default, tests run against a "sqlite:memory" database. To force both the web2py and pydal tests to run on a particular database, set an environment variable “DB” to the URI of that database.

E.g., if you'd like to run the tests on your own PostgreSQL instance, just open a shell and type:

```bash
cd path/to/web2py
export DB=postgres://username:password@localhost/test
./web2py.py --run_system_tests   # for web2py
# python -m unittest -v tests    # for pydal
```

If you're on Windows, type “set” instead of “export”.
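A portable alternative to export/set is to set DB from Python when launching the test runner, since the environment is handed directly to the child process on every OS. This is a minimal sketch under a few assumptions: env_with_db and run_web2py_tests are hypothetical helpers, web2py_dir is the folder containing web2py.py, and the interpreter running the script is the one you want web2py to use.

```python
import os
import subprocess
import sys


def env_with_db(db_uri, base_env=None):
    """Return a copy of the environment with DB set to db_uri.

    The current process environment (or base_env) is left untouched.
    """
    env = dict(os.environ if base_env is None else base_env)
    env["DB"] = db_uri
    return env


def run_web2py_tests(web2py_dir, db_uri):
    """Run web2py's system tests against db_uri and return the exit code."""
    cmd = [sys.executable, "web2py.py", "--run_system_tests"]
    return subprocess.call(cmd, cwd=web2py_dir, env=env_with_db(db_uri))
```

For example, run_web2py_tests("path/to/web2py", "postgres://username:password@localhost/test") behaves like the export/set recipe without touching your shell session.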

NB: your database must already exist and be EMPTY (meaning, no tables in it).
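If you test against a SQLite file, you can check the "EMPTY" requirement before launching the tests. This SQLite-only sketch reads the sqlite_master catalog; other engines expose the same information through their own catalogs, so treat user_tables and assert_empty as hypothetical helpers, not part of web2py or pydal.

```python
import sqlite3


def user_tables(conn):
    """Sorted names of the tables in an open SQLite connection.

    SQLite's internal tables (named sqlite_*) are skipped.
    """
    rows = conn.execute(
        "SELECT name FROM sqlite_master WHERE type = 'table'"
    ).fetchall()
    return sorted(name for (name,) in rows if not name.startswith("sqlite_"))


def assert_empty(conn):
    """Raise RuntimeError if the database still contains tables."""
    tables = user_tables(conn)
    if tables:
        raise RuntimeError("database is not EMPTY, found: " + ", ".join(tables))
```

Usage: assert_empty(sqlite3.connect("path/to/test.sqlite")). Note that sqlite3.connect creates a missing file, so a database that doesn't exist yet also passes the check.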

To enable coverage reports, you need the coverage module installed:

```bash
pip install --upgrade coverage
```

Again, web2py smooths out lots of things for you. The following runs the tests and measures coverage at the same time:

```bash
cd path/to/web2py
./web2py.py --run_system_tests --with_coverage   # for web2py
# or
coverage run -m unittest -v tests                # for pydal
```

There's a default coverage.ini file right in gluon/tests and a .coveragerc in the root of pydal.

PS: To use your own configuration, just set the env variable COVERAGE_PROCESS_START to the path of your config file.
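If you want to start from your own configuration, a minimal file could be generated like this. The option names ([run] source, branch and parallel, [html] directory) are standard coverage.py settings, but the values are illustrative assumptions, not a copy of gluon/tests/coverage.ini; render_coverage_config and env_with_coverage_config are hypothetical helpers.

```python
import configparser
import io
import os


def render_coverage_config(source="gluon", html_dir="coverage_html_report"):
    """Return the text of a minimal coverage.py config file (illustrative values)."""
    cfg = configparser.ConfigParser()
    # [run]: what to measure; parallel=True writes one .coverage.<suffix> file per process
    cfg["run"] = {"source": source, "branch": "True", "parallel": "True"}
    # [html]: where `coverage html` writes the report
    cfg["html"] = {"directory": html_dir}
    out = io.StringIO()
    cfg.write(out)
    return out.getvalue()


def env_with_coverage_config(config_path, base_env=None):
    """Copy of the environment with COVERAGE_PROCESS_START pointing at config_path."""
    env = dict(os.environ if base_env is None else base_env)
    env["COVERAGE_PROCESS_START"] = os.path.abspath(config_path)
    return env
```

Write the returned text to a file, then pass env_with_coverage_config(path) as the environment of the process that runs the tests.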

Given all the web2py defaults, after running the tests with coverage you'll see a new file in the web2py folder, named roughly “.coverage.something”. Those files hold all the “raw” data for the coverage report.

Now we need to turn this “raw” data into a “readable” report.

NB: by default coverage combines all the available coverage “runs” into a single report. It's best to delete all those files first, then run the tests and generate a report containing only the results of the latest run.
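coverage erase is the proper way to delete those files. If you just want to see how many leftover runs are sitting in the web2py folder before generating a report, a hypothetical helper like this one lists them; it assumes the default data file name (.coverage, plus .coverage.<suffix> for each separate run).

```python
import os


def is_coverage_data(name):
    """True for coverage data files: '.coverage' and '.coverage.<suffix>'."""
    # '.coveragerc' is a config file, not data, so require the exact name or a dot
    return name == ".coverage" or name.startswith(".coverage.")


def coverage_data_files(directory):
    """Sorted names of the coverage data files found in directory."""
    return sorted(n for n in os.listdir(directory) if is_coverage_data(n))
```

For example, print(coverage_data_files("path/to/web2py")) after two test runs should show two .coverage.* entries that would both end up in the next report.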

Usually the coverage config lives in a file called .coveragerc. We keep ours in gluon/tests/coverage.ini, so we need to supply the path to the config file. coverage ships with nice helpers for all of this, so the usual sequence is:

```bash
cd /path/to/web2py
coverage erase                                    # deletes previously collected data
./web2py.py --run_system_tests --with_coverage
coverage combine                                  # merges all the generated coverage data
coverage html --rcfile=gluon/tests/coverage.ini   # creates a nice HTML report
```

Once you've created the HTML report, open /path/to/web2py/coverage_html_report/index.html to see it.

That report closely resembles what you see on https://codecov.io/github/web2py/web2py . If you click on a file, you'll see which lines are covered by tests and which are not (the red ones).

How to improve coverage

Choose a piece of code that isn't tested (the red lines) and figure out a way to write a test for it. Unfortunately, if you're not familiar with unittest, you'll need to spend a little time learning the syntax. The existing tests in gluon/tests can definitely help you out in the process: it's not **that** hard :-P

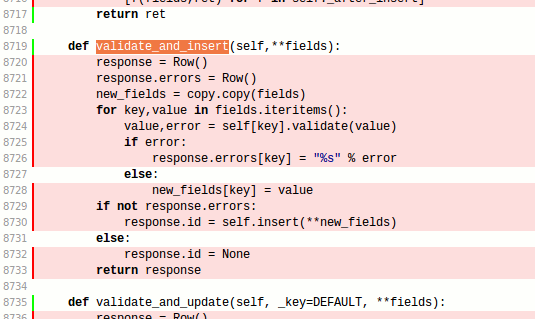

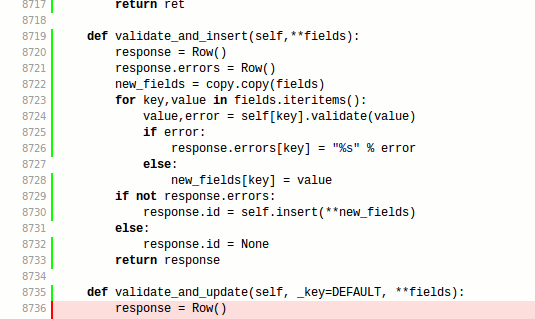

Let's say I'd like to improve tests for pydal's validate_and_insert() function (ATM untested).

Tests are unittest test cases in gluon/tests/ (or tests/ for pydal).

NB: pydal tests are a little different from the others, in the sense that every test finds an EMPTY database and must leave the database EMPTY when it's finished. So all pydal tests REQUIRE that you use the variable DEFAULT_URI as the uri (it's the one that gets used when you pass the DB env variable), and that you remember to .drop() your tables at the end, so that your tests never get in the way of other tests.
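A drop() at the very end of a test only runs if every assertion before it passes. A more defensive pattern registers the cleanup with unittest's addCleanup right after creating the table, so it runs even when the test fails. This sketch uses the standard sqlite3 module as a stand-in for pydal (in a real pydal test the cleanup would be db.val_and_insert.drop); the class name is hypothetical. addCleanup exists from Python 2.7 on; on 2.5/2.6 a tearDown method does the same job.

```python
import sqlite3
import unittest

# Stand-in for the shared test database; a real pydal test would use DAL(DEFAULT_URI)
CONN = sqlite3.connect(":memory:")


class TestLeavesDatabaseEmpty(unittest.TestCase):

    def setUp(self):
        CONN.execute("CREATE TABLE val_and_insert (aa TEXT, bb INTEGER)")
        # Registered cleanups run even when the test fails, so the table
        # is always dropped and the next test finds an EMPTY database.
        self.addCleanup(CONN.execute, "DROP TABLE val_and_insert")

    def testInsert(self):
        CONN.execute("INSERT INTO val_and_insert VALUES (?, ?)", ("test1", 2))
        count = CONN.execute("SELECT COUNT(*) FROM val_and_insert").fetchone()[0]
        self.assertEqual(count, 1)
```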

Use meaningful class names for tests and comment as much as you can, to help others understand why you wrote a test and which condition it verifies.

```python
import os
import unittest

from gluon.dal import DAL, Field
from gluon.validators import IS_INT_IN_RANGE

# uri taken from the DB env variable, falling back to the in-memory SQLite database
DEFAULT_URI = os.getenv('DB', 'sqlite:memory')


class TestValidateAndInsert(unittest.TestCase):

    def testRun(self):
        db = DAL(DEFAULT_URI, check_reserved=['all'])
        db.define_table('val_and_insert',
                        Field('aa'),
                        Field('bb', 'integer',
                              requires=IS_INT_IN_RANGE(1, 5)))
        # a valid insert: bb=2 satisfies IS_INT_IN_RANGE(1, 5)
        rtn = db.val_and_insert.validate_and_insert(aa='test1', bb=2)
        self.assertEqual(rtn.id, 1)
        # errors should be empty
        self.assertEqual(len(rtn.errors.keys()), 0)
        # this insert won't pass validation: bb is not an integer
        rtn = db.val_and_insert.validate_and_insert(bb="a")
        # the returned id should be None
        self.assertEqual(rtn.id, None)
        # an error message should be in rtn.errors.bb
        self.assertNotEqual(rtn.errors.bb, None)
        # cleanup: drop the table so the database is left EMPTY
        db.val_and_insert.drop()
```

Ok, time to run the tests again...

Check the HTML report... nice, validate_and_insert is now tested!!!

Now go help the developers by adding tests, as everyone will benefit from them:

- developers adding features are instantly notified if they may have broken existing functionality

- users can upgrade without worries, knowing that the code is tested

And all of this happens as soon as something gets committed: how wonderful is that!!!
